AI writes code. Engineers build systems.


Why thinking in systems, not prompts, still runs the world

This is a guest post by Alexandre Zajac, SDE II at Amazon and founder of the Hungry Minds community, which is read by 50k+ engineers from big tech and startups.

AI didn’t kill engineering. It just exposed how few people were actually doing it.

We live in an era of AI hype and FOMO, where every scroll promises a new algorithm, every LinkedIn post declares that AI will replace your job, and every “expert” sells a shortcut to success.

But under the surface of all this noise, something dangerous is happening: we’re starting to forget what real engineering looks like.

Key takeaways

- AI writes code, but engineers design systems. Real engineering lies in solving ambiguous problems, not generating syntax.

- AI lacks context, judgment, and ethics. A machine will never be held accountable for the damage it causes. A human is, and can mitigate it too.

- The future belongs to those who ask “why”. AI can replicate the past, but only humans can invent what comes next.

If AI can write code, what’s left for engineers?

Writing code is no longer the bottleneck. AI can do that. But knowing what to build, why it matters, and how to keep it running: that’s still our job.

Because, despite what the flashy demos tell you, AI is not a replacement for engineers. It’s a very clever intern. And it needs guidance. Direction. Judgment.

Foundation still matters.

In fact, it matters more than ever.

Because tools come and go, but principles last.

There are hundreds of new tools every month. If you try to keep up with all of them, you will end up in the wrong place. Real engineering isn’t about knowing the latest framework, LLM, or AI wrapper. It’s about thinking in systems, understanding constraints, and applying structured methods to messy, ambiguous problems.

Trends can be useful, but they are also dangerous.

They create the illusion that you understand things because you know how to use tools.

Do tools make you an engineer?

As Grady Booch once said, “A fool with a tool is still a fool.” And right now, we’ve handed incredibly powerful tools to people who haven’t learned how to think like engineers. It’s not a tech problem; it’s a thinking problem.

That’s why so many AI-first projects collapse: they start with “look what this can do” instead of “what problem are we solving?”

Engineering is not about copy-pasting code. It’s about reasoning through edge cases, planning for failure, and building things that work beyond the prototype.

That kind of thinking can’t be prompt-engineered. It takes discipline, not dopamine.

AI didn’t kill engineering. It just exposed how few people were actually doing it.

What does it mean to think like an engineer?

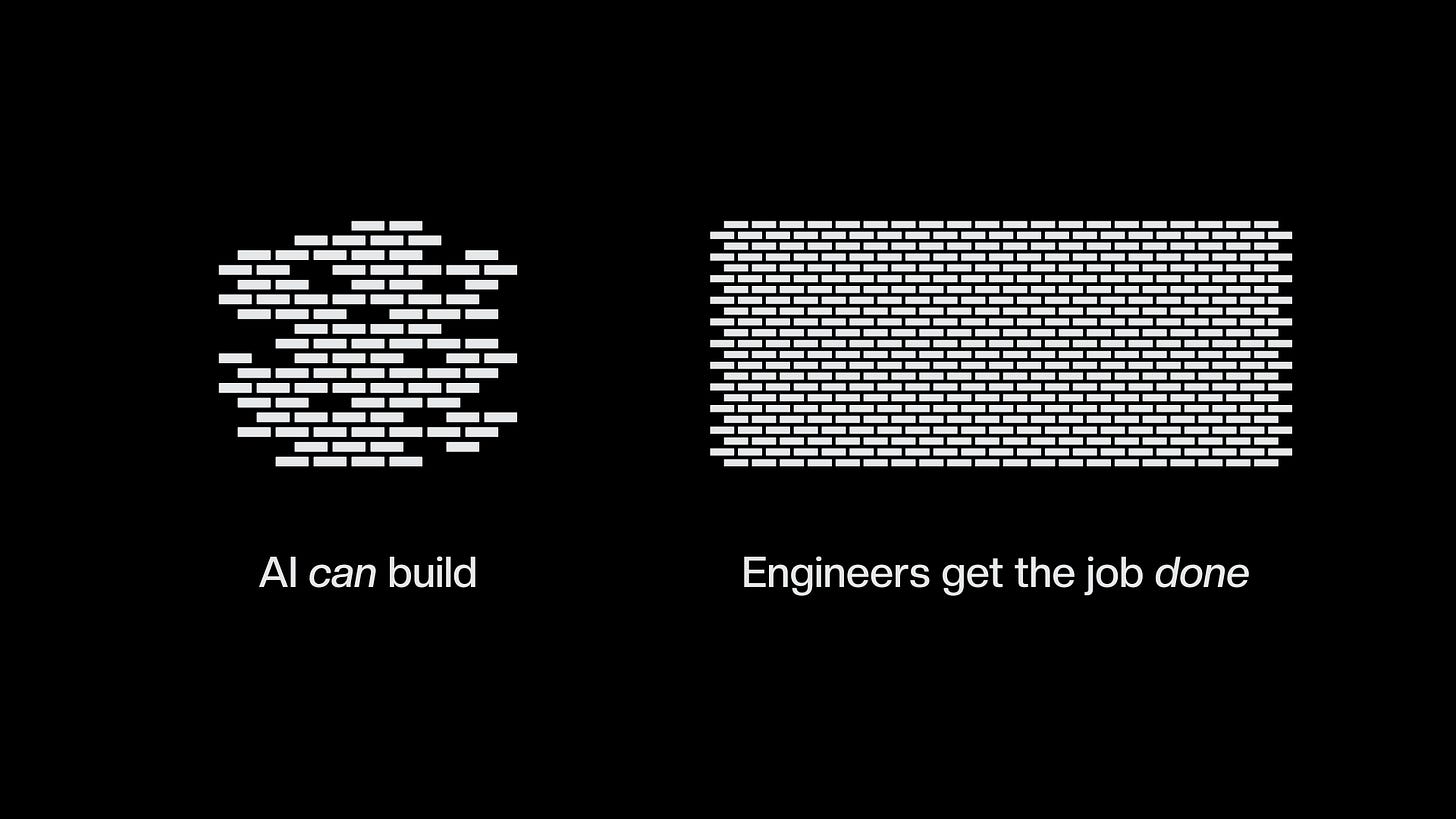

Engineers think differently: AI writes code, engineers design systems.

Let’s get one thing straight:

AI is good at generating code.

It can churn out functional snippets faster than any human coder. But code is just the building block. Engineering is about building systems, and that’s where AI falls short.

Imagine building a skyscraper with bricks but no blueprint.

That’s AI without systems thinking.

Why do AI-first startups collapse?

Take Y Combinator’s 2025 cohort as a perfect example.

A quarter of the startups in this batch reported that 95% of their code was written by AI (TechCrunch). Yet, these startups still leaned heavily on human engineers to debug, optimize, and integrate those components into scalable, cohesive architectures.

Why?

Because AI doesn’t think in systems.

It doesn’t understand how a database schema impacts API performance or how a caching layer can bottleneck under load.

Engineers do.
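To make the caching point concrete, here is a minimal Python sketch of one classic failure: a cache stampede, where a hot key expires and every concurrent request misses at the same moment and hits the database. `SingleFlightCache` and `slow_query` are hypothetical names, and the 50 ms "query" is simulated with `time.sleep`. The mitigation shown (a per-key lock so only one caller reloads a miss) is one common option, not the only design.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class SingleFlightCache:
    """Hypothetical read-through cache that lets one caller per key reload a miss.

    Without the per-key lock, N concurrent misses on a hot key would send
    N identical queries to the backend: a cache stampede.
    """

    def __init__(self, loader, ttl_seconds=60.0):
        self._loader = loader            # backend call, e.g. a database query
        self._ttl = ttl_seconds
        self._data = {}                  # key -> (value, expires_at)
        self._locks = {}                 # key -> threading.Lock
        self._guard = threading.Lock()   # protects self._locks

    def _lock_for(self, key):
        with self._guard:
            return self._locks.setdefault(key, threading.Lock())

    def _fresh(self, key):
        entry = self._data.get(key)
        if entry is not None and entry[1] > time.monotonic():
            return entry
        return None

    def get(self, key):
        entry = self._fresh(key)
        if entry is not None:
            return entry[0]              # fast path: fresh hit, no lock taken
        with self._lock_for(key):
            # Re-check: another thread may have loaded the key while we waited.
            entry = self._fresh(key)
            if entry is not None:
                return entry[0]
            value = self._loader(key)
            self._data[key] = (value, time.monotonic() + self._ttl)
            return value


# Usage: 50 concurrent requests for the same cold key.
backend_calls = 0
calls_lock = threading.Lock()


def slow_query(key):
    global backend_calls
    with calls_lock:
        backend_calls += 1
    time.sleep(0.05)                     # simulated slow database query
    return f"row-for-{key}"


cache = SingleFlightCache(slow_query)
with ThreadPoolExecutor(max_workers=50) as pool:
    results = list(pool.map(cache.get, ["user:42"] * 50))

print(backend_calls)  # 1
```

The per-key lock trades a little extra latency for the waiting callers against protecting the backend. Across multiple processes or machines, the same idea would need coordination at another layer, which is exactly the kind of system-level decision the code alone doesn’t make.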

Two examples, out of many, illustrate this:

- Once a robotics darling, Anki shut down despite raising over $200 million. Their system lacked coherence. Their AI couldn’t balance long-term cost, scalability, and UX.

- Ghost Autonomy, which aimed to bring autonomous driving to consumer vehicles, collapsed in 2024 after missing deadlines and pivoting multiple times (TechCrunch). They had the AI-powered tech but couldn’t integrate it into a scalable, real-world system.

Like it or not, engineers excel at trade-offs:

- “Do we optimize for speed or security?”

- “Will this scale when 10M users hit at midnight?”

- “How do we future-proof this against next year’s tech?”
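A question like “will this scale when 10M users hit at midnight?” usually starts with back-of-envelope arithmetic. The sketch below uses hypothetical numbers (3 requests per user, 2,000 requests per second per instance, instances kept at 70% of measured capacity); real figures would come from load testing.

```python
import math


def instances_needed(users, requests_per_user, burst_window_s,
                     rps_per_instance, target_utilization=0.7):
    """Back-of-envelope count of instances needed to absorb a traffic burst.

    Assumes the burst is spread evenly over burst_window_s seconds and that
    each instance should run at no more than target_utilization of its
    measured maximum throughput (rps_per_instance).
    """
    peak_rps = users * requests_per_user / burst_window_s
    usable_rps = rps_per_instance * target_utilization
    return math.ceil(peak_rps / usable_rps)


# Hypothetical numbers: 10M users, 3 requests each, 2,000 req/s per instance.
print(instances_needed(10_000_000, 3, 60, 2_000))    # 358 (burst within one minute)
print(instances_needed(10_000_000, 3, 600, 2_000))   # 36 (same users over ten minutes)
```

The tenfold gap between the two answers is the trade-off in miniature: the same user count implies very different architectures depending on how sharply traffic peaks, and the instance count says nothing yet about whether the database behind those instances can keep up.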

Can AI be trusted with ethics and accountability?

AI doesn’t care. It just generates.

It lacks a moral compass. Engineers build with principles.

AI has no conscience. It processes data and follows patterns, but it can’t weigh the moral implications of what it produces.

GitHub Copilot can regurgitate copyrighted code.

Clearview AI scraped 3B faces without consent.

And in 2023, an algorithmic “immigration adviser” wrongly deported 2,000 people.

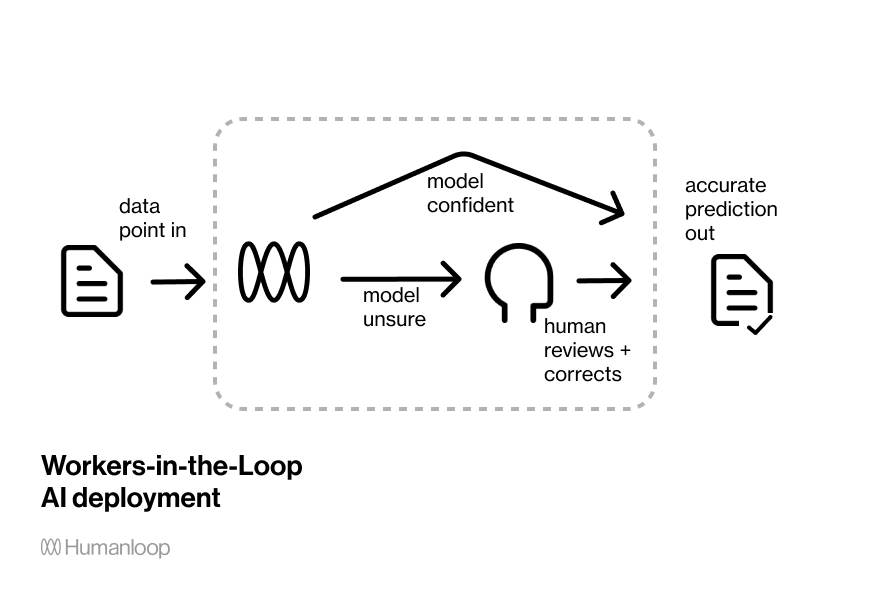

We need humans in the loop.

People today trust software because they trust the people and companies that built it. They need to see the reasoning behind a system’s decisions, and they need to know that someone is accountable:

GDPR compliance isn’t a checkbox; it’s designing data flows that respect users.

Forbes reports that 67% of consumers distrust AI decisions without human oversight.

Engineers pulled the plug when IBM’s Watson suggested “unsafe and incorrect” cancer treatments.

AI doesn’t lose sleep over ethics. You do.

What happens when AI meets legacy systems and the real-world mess?

The real world isn’t a clean slate. It’s messy, full of legacy systems and spaghetti code that AI can’t untangle.

Take banking or government systems, for instance: decades-old mainframes still run critical operations on COBOL.

AI tools might generate fancy new code, but they struggle to integrate with or refactor these undocumented, patchwork systems.

Startups like Ridecell succeeded by bridging AI with legacy infrastructure, but that required human expertise to navigate the complexity.

Then there’s quality assurance (QA).

AI is great at generating code, but it’s terrible at testing it.

Most AI tools only test the “happy path,” ignoring edge cases and real-world chaos. This is a recipe for disaster.
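As an illustration of the gap between happy-path and edge-case testing, here is a hypothetical `parse_amount_cents` helper for a payments form, sketched in Python with the standard `decimal` module. The function name and its validation rules are assumptions chosen for the example; the point is that the first assertion alone would pass even if every other check were missing.

```python
from decimal import Decimal, InvalidOperation


def parse_amount_cents(text: str) -> int:
    """Parse a user-entered amount such as '12.34' into integer cents (hypothetical helper)."""
    cleaned = text.strip()
    if not cleaned:
        raise ValueError("empty amount")
    try:
        value = Decimal(cleaned)
    except InvalidOperation:
        raise ValueError(f"not a number: {text!r}") from None
    if not value.is_finite():            # rejects NaN and Infinity
        raise ValueError("amount must be finite")
    if value < 0:
        raise ValueError("amount must be non-negative")
    cents = value * 100
    if cents != cents.to_integral_value():
        raise ValueError("amount has sub-cent precision")
    return int(cents)


def test_parse_amount_cents():
    # Happy path.
    assert parse_amount_cents("12.34") == 1234
    # Edge cases that should still succeed.
    assert parse_amount_cents("  7 ") == 700       # surrounding whitespace
    assert parse_amount_cents("12.340") == 1234    # trailing zero, still whole cents
    # Edge cases that must be rejected, not silently accepted.
    for bad in ["", "   ", "abc", "1,000", "NaN", "Infinity", "-5", "12.345"]:
        try:
            parse_amount_cents(bad)
        except ValueError:
            continue
        raise AssertionError(f"accepted invalid input: {bad!r}")


test_parse_amount_cents()
```

Using `Decimal` instead of `float` is a deliberate choice here: binary floating point cannot represent most decimal cent values exactly, which is itself an edge case a happy-path test rarely exposes.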

Engineers, on the other hand, design rigorous testing frameworks that validate systems against edge cases and failure modes, not just expected inputs. We enforce standards like SOC 2 and build resilience into critical industries like aviation and finance.

Simple question: will we ever reach a point where people trust an aviation system designed and tested by AI more than one designed and tested by humans?

Why does this matter?

Because in the real world, systems fail. Outages happen. And when they do, it’s engineers who triage the damage (e.g., CloudNordic’s ransomware shutdown, where human intervention was crucial to restoring operations).

AI can’t handle that. It doesn’t understand the context or the stakes. Engineers do.

AI won’t create the future, but you will.

LLMs are trained to reproduce patterns in data they have already seen, and that architecture and training process constrains their ability to generate novel, future-oriented solutions.

AI can automate repetitive tasks and generate code based on what it’s seen before. But here’s the thing: it can’t invent the future. It can’t ask, “What if?”

True engineering isn’t just about solving today’s problems.

It’s about creating tomorrow’s possibilities. AI can assist by providing data and insights, but true invention, the ability to synthesize disparate ideas and push boundaries, remains a human trait.

Blockchain, quantum computing, and drug synthesis weren’t AI’s ideas. They’re human bets on the unknown.

As we look to the future, AI will continue to be a powerful tool. But it’s engineers who will lead the way, shaping how that tool is used to build the technologies of tomorrow.

What’s still ours?

AI will keep getting better, faster, and cheaper. It will refactor our code, automate tasks, and even generate prototypes while we sleep.

And yet the hardest question remains unanswered:

Is there anything left to build, and will it be meaningful?

That question demands more than code.

It demands trade-offs, judgment, ethics, and long-term thinking. It demands systems designed to last, not demos made to impress.

And it demands people: not just coders, but engineers.

Engineers who understand that trust is earned, that constraints are features, and that building for the real world means expecting it to break.

The future doesn’t need more code.

What’s missing are people who really know how to think.

👉 For engineering managers who struggle to scale their solution, we provide free 1:1 consulting services with Alexandru Vesa. Book an intro call here.